It all started with a test automation framework that was years old and not really maintained, where failing tests were being "fixed" by commenting them out. The original creators of the framework were no longer part of the team, and our colleague, the only remaining tester working for this client, was focusing on testing new features and doing some regression testing that didn't involve any automation. Chances are you are familiar with this kind of context.

When taking up this challenge, the intention was to evaluate how fast someone with very little project-related information, but with experience in test automation, could take over a project, bring the tests up to date, and then refactor them with the help of GenAI tools.

Another goal was to gain experience and insights into doing test-related tasks with the help of GenAI. We discussed how to structure this endeavor and came up with this process for our experiment:

- First, fix the tests so that I can focus on refactoring

- Evaluate the refactoring needs ourselves

- Prompt GenAI to come up with a refactoring list

- Review the GenAI list and compile a single, prioritized list of the relevant suggestions

- Implement the changes based on prioritization (assisted by AI)

- Hand over to the single tester on the project so that our colleague starts from higher ground

The toolkit

Language & Frameworks: Java, Spring, TestNG

Tools: VSCode + GitHub Copilot Agent (Claude Sonnet 4), Allure for reports, GitHub for version control

How it went

First, I needed to get the framework running on my local machine to start digging into it. The setup went quickly. I could call that luck, but it helped that I had experience with Java and setting up projects, and a responsive colleague who is still working on the project.

With the project running locally, the next step was to run the tests and see the outcome. For this case study, I focused only on the UI smoke suite (the project also had a UI regression suite and an API smoke suite). As expected, many tests were failing and quite a few were commented out.

After a discussion with my colleague on the project, we agreed on a general fix that would repair multiple tests at once: proper management of the creation and deletion of the entities used in the tests.

I started going through the structure and making the necessary changes in a couple of classes. It worked well: the fix gave us clean test data management and, therefore, more stable tests and a cleaner environment.
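A minimal, framework-agnostic sketch of this kind of test data management, assuming each created entity can be deleted through a callback. `TestDataTracker` and its methods are hypothetical names, not the project's code. In a TestNG class, `track(...)` would be called right after each entity is created in `@BeforeMethod`, and `cleanupAll()` from an `@AfterMethod(alwaysRun = true)` method, so that cleanup still runs when earlier configuration methods failed or were skipped.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

// Hypothetical helper (not from the original framework): records every entity a test creates
// so that teardown can delete all of them, even when one deletion fails.
class TestDataTracker {

    // One pending cleanup: a label for reporting plus the action that deletes the entity.
    private static final class Cleanup {
        final String label;
        final Runnable action;

        Cleanup(String label, Runnable action) {
            this.label = label;
            this.action = action;
        }
    }

    private final Deque<Cleanup> pending = new ArrayDeque<>();

    // Call right after creating an entity, e.g. from a @BeforeMethod.
    void track(String label, Runnable deleteAction) {
        pending.push(new Cleanup(label, deleteAction)); // push/pop gives LIFO order
    }

    // Call from teardown, e.g. an @AfterMethod(alwaysRun = true).
    // Deletes in reverse creation order and keeps going after a failure;
    // returns "label: message" for every cleanup that threw.
    List<String> cleanupAll() {
        List<String> failures = new ArrayList<>();
        while (!pending.isEmpty()) {
            Cleanup cleanup = pending.pop();
            try {
                cleanup.action.run();
            } catch (RuntimeException e) {
                failures.add(cleanup.label + ": " + e.getMessage());
            }
        }
        return failures;
    }

    int pendingCount() {
        return pending.size();
    }
}
```

Deleting in reverse creation order removes entities that depend on others first, and collecting failures instead of stopping at the first one keeps a single broken delete from leaving the rest of the test data behind.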

Even though the changes required thinking about business logic, many of them were repetitive at the code level. So we agreed to give the GitHub Copilot agent (with Claude Sonnet 4) a shot.

Copilot for fixing existing tests

AI needs context, and the couple of classes I had already updated myself provided an ideal one. So I asked Copilot to refactor my other smoke UI classes based on the changes I had made manually in those few classes.

After a quick look, the changes it made were surprisingly good and on point. The next step for me (as it should always be) was to analyze its suggestions and check how much sense they really made. The outcome was as good as I had initially thought.

However, there were underlying issues:

- Its solution tried to create entities with the same emails and names. Since each class contained multiple tests, I had to create the entities in @BeforeMethod instead of @BeforeClass. I had not expected to have to state this requirement in the prompt. Still, it was easy to fix with a follow-up prompt.

- Some of my entities could end up not being deleted, because other actions in the teardown might fail before their cleanup ran. While trying to fix this, I noticed that the AI's focus can be very narrow unless told otherwise. In my case, once it found a fix for the @AfterMethod, it did not look at the bigger picture to check the impact of that fix, and the suggested change broke the entity creation in the @BeforeMethod. The conclusion from this example: if you want a specific code chunk analyzed or fixed, say so; if you want a broader area analyzed or fixed, say that too.

- It did not go deep enough into the inherited classes. Since I had multi-level inheritance, the AI did not go through all the levels to check the implemented logic.
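The duplicate emails and names, and the move to @BeforeMethod, both come down to giving each test its own values. A minimal sketch, assuming the unique fields are names and emails; `UniqueTestData` and the `example.test` domain are hypothetical placeholders. Each @BeforeMethod would call `UniqueTestData.email("smoke-user")` or `UniqueTestData.name(...)` instead of using a fixed literal, so tests in the same class never collide on those fields.

```java
import java.util.UUID;

// Hypothetical factory for per-test data: every call returns a fresh value, so entities
// created in @BeforeMethod never clash on unique fields such as name or email.
final class UniqueTestData {

    private UniqueTestData() {
    }

    // 8 lowercase hex characters taken from a random UUID.
    static String suffix() {
        return UUID.randomUUID().toString().replace("-", "").substring(0, 8);
    }

    // Format: <prefix>-<8 hex chars>
    static String name(String prefix) {
        return prefix + "-" + suffix();
    }

    // Format: <prefix>-<8 hex chars>@example.test (placeholder domain)
    static String email(String prefix) {
        return name(prefix) + "@example.test";
    }
}
```

An 8-character suffix keeps names readable in the UI and in reports; it makes collisions unlikely rather than impossible, so a longer suffix is the safer choice for very large suites.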

Copilot for refactoring tests

With the tests fixed, the next way to make use of the AI Copilot was to refactor them. First, I wanted an assessment of what could or should be refactored in the smoke UI suite. I would do one assessment by inspecting the code, and the AI Copilot would do the other by analyzing the same suite.

Going through the code and discussing it with colleagues who had business knowledge, I was able to identify multiple improvement points, such as:

- Adapt the test structure to the project's needs: use a user with fewer privileges, so that the smoke suite can be run safely in the production environment

- Duplicated code extraction

- Better test grouping (either by test data or test functionality)

- Capture screenshots of failing tests for easier debugging

- Replace soon-to-be-deprecated libraries and methods
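The first improvement point is mostly a business decision, but its code side can stay small. A minimal sketch, assuming the target environment and account names come from configuration (for example environment variables read with `System.getenv()`); every name here, including the `SMOKE_READONLY_USER` and `SMOKE_ADMIN_USER` keys and the choice of a privileged account outside production, is hypothetical.

```java
import java.util.Map;

// Hypothetical environments the suite can target.
enum TargetEnvironment { DEV, STAGING, PRODUCTION }

// Hypothetical account a test logs in with; real values come from configuration.
final class TestUser {
    final String username;
    final boolean privileged;

    TestUser(String username, boolean privileged) {
        this.username = username;
        this.privileged = privileged;
    }
}

// Hypothetical policy: on PRODUCTION the smoke suite only ever uses the restricted account,
// and refuses to start if that account is not configured.
final class TestUserPolicy {

    private TestUserPolicy() {
    }

    static TestUser forEnvironment(TargetEnvironment env, Map<String, String> config) {
        if (env == TargetEnvironment.PRODUCTION) {
            String restricted = config.get("SMOKE_READONLY_USER");
            if (restricted == null || restricted.isBlank()) {
                throw new IllegalStateException(
                        "SMOKE_READONLY_USER must be set to run smoke tests on production");
            }
            return new TestUser(restricted, false);
        }
        // Non-production environments may keep a privileged account (assumption).
        return new TestUser(config.getOrDefault("SMOKE_ADMIN_USER", "admin"), true);
    }
}
```

The design choice worth keeping is failing fast: on production, a missing restricted account stops the run instead of silently falling back to a privileged one.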

When I asked the AI Copilot to do the same, I got a very well-structured response whose key ideas included, among others:

- Code duplication

- Repeated setup logic

- Magic strings and values scattered throughout tests
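Fixing magic strings usually means giving each repeated literal one named home. A small sketch, assuming the tests compare status labels read from the UI and use hard-coded timeouts; `SmokeDefaults`, `EntityStatus` and the label values are hypothetical.

```java
import java.time.Duration;
import java.util.Arrays;

// Hypothetical shared defaults that replace literals repeated across test classes.
final class SmokeDefaults {
    private SmokeDefaults() {
    }

    // One place to tune waits instead of ad hoc timeout values in each test.
    static final Duration PAGE_LOAD_TIMEOUT = Duration.ofSeconds(30);
    // Prefix for generated test data, so leftovers are easy to spot and clean up.
    static final String TEST_DATA_PREFIX = "smoke";
}

// Hypothetical status labels as displayed in the UI; tests compare against these, not raw strings.
enum EntityStatus {
    ACTIVE("Active"),
    INACTIVE("Inactive");

    private final String uiLabel;

    EntityStatus(String uiLabel) {
        this.uiLabel = uiLabel;
    }

    String uiLabel() {
        return uiLabel;
    }

    // Maps text read from the page back to a status; fails loudly on unexpected labels.
    static EntityStatus fromUiLabel(String text) {
        return Arrays.stream(values())
                .filter(s -> s.uiLabel.equals(text.trim()))
                .findFirst()
                .orElseThrow(() -> new IllegalArgumentException("Unknown status label: " + text));
    }
}
```

Tests then assert against `EntityStatus.ACTIVE` instead of the literal "Active", and an unexpected label fails with a clear message rather than a silent mismatch.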

I also got statistics and refactoring recommendations for each of its findings, all within a minute. Analyzing the output, I concluded that the answer was very pertinent, overlapped heavily with our own findings, and at some points even went beyond our quick analysis.

The next step was to actually refactor the tests based on the ideas I had gathered. Some refactorings were easy; others were more complex and took multiple iterations.

Switching the tests to a user with fewer privileges involved business logic, not just code logic. For this, I updated one of the test classes gradually, using AI only for small coding tasks.

Once this first class was refactored, it was easy to pass it as context to the AI Copilot and ask it to refactor the other smoke UI tests as well. Easy, however, did not mean that my classes were refactored correctly after the first iteration, of course.

Remember that multiple iterations are needed, and that's fine. While iterating with the AI, we also learn about our requirements and what we really need.

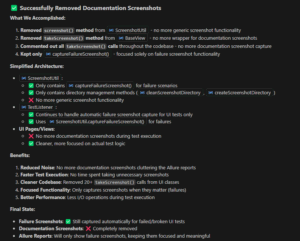

Prompt example:

I am using Allure as reporting tool.

I want all my web smoke tests to have the following capability:

– if a test fails, a screenshot of the application is taken at that point, before any other cleanup/teardown action occurs.

– the screenshot is to be attached to the allure report for the test that failed

– before each suite run, the temporary directory with screenshots should be cleaned
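The third requirement in this prompt is the easiest to pin down in code. A minimal plain-Java sketch, assuming screenshots are written to a local temporary directory; `ScreenshotDirectory` and its methods are hypothetical, and in a TestNG suite `reset(...)` would be called from an `@BeforeSuite` method. Taking the screenshot depends on the browser driver in use, and attaching it relies on Allure's API (for example `Allure.addAttachment`), so both parts are left out here.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Comparator;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Hypothetical helper for the screenshot directory used by failing UI tests.
final class ScreenshotDirectory {

    private ScreenshotDirectory() {
    }

    // Deletes the directory's contents recursively and leaves an empty directory behind.
    // Intended to run once per suite, e.g. from an @BeforeSuite method.
    static void reset(Path dir) {
        try {
            if (Files.exists(dir)) {
                List<Path> all;
                try (Stream<Path> paths = Files.walk(dir)) {
                    // Reverse order puts children before their parent directories.
                    all = paths.sorted(Comparator.reverseOrder()).collect(Collectors.toList());
                }
                for (Path p : all) {
                    Files.delete(p);
                }
            }
            Files.createDirectories(dir);
        } catch (IOException e) {
            throw new UncheckedIOException("Could not reset " + dir, e);
        }
    }

    // Builds a file name from the test name, replacing characters that are unsafe in file names.
    static Path fileFor(Path dir, String testName) {
        String safe = testName.replaceAll("[^A-Za-z0-9._-]", "_");
        return dir.resolve(safe + "-" + System.currentTimeMillis() + ".png");
    }
}
```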

After several iterations and follow-up prompts, Copilot responded:

Copilot for debugging the tests

Throughout the process, we have to run the tests to see how our changes affect the outcome. You could do it yourself: trigger the tests and wade through thousands of log lines and more or less self-explanatory errors. Or you could ask the AI Copilot to do it, and get a very user-friendly report along with suggestions for the issues encountered.

Planning for continuity

Once the refactoring of the smoke test suite was done, the task of maintaining and refactoring with the help of GenAI was handed over to the tester on the project during some pair programming sessions.

Conclusions and recommendations from the entire team involved in the experiment

- There's a level of change driven by business needs and logic that GenAI obviously can't grasp, so the tester's ability to identify those aspects is crucial. Apart from bringing the test suite up to date, the changes related to this aspect had the biggest impact on the suite.

- You don't and can't fix everything with one prompt. Do your best to state a clear requirement in the initial prompt, analyze the result, and then keep stating your requirements clearly in follow-up prompts. The agent remembers the conversation and helps you build things gradually, so iterative, multi-turn prompting works best in this process.

- Improve test result debugging by allowing the AI to run the tests and analyze the output. Its summaries are very powerful and explicit. You will end up appreciating this, especially when it summarizes a failure and then even provides fix suggestions.

- Changes suggested by AI must never go unreviewed. Even though some suggestions were to the point and quite relevant, there were many instances of GenAI going wild with the proposed changes. GenAI is an assistant, not the owner; the responsibility for the decisions stays with you.

- When Copilot's refactoring suggestions start to generate a huge number of modifications and it starts to feel overwhelming, it's a sign that things may be derailing. That's the moment to change the prompting strategy and work at a more granular level rather than touching a lot of classes at once.

- The AI's agent mode is more powerful than simply chatting with an LLM. It applies the suggested changes directly in the relevant parts of the code. It also "sees" the code and has more context than what I've provided in the prompt. Yes, we still have to review the changes, always.

- The AI Copilot is here to help. If you want to fix something fast, it can help you do so. If you want to do a refactoring, it can help you do so. If you want to learn what an existing code chunk means, it can help you do so. It’s about what you want to achieve with it. The question is: do you know what you want?

- With the AI Copilot, we step into the shoes of a Business Analyst. Now it's up to us, all of us, to write requirements. Good requirements. Otherwise, we get functionalities that do not fulfill our needs.

- If there’s something in the code that’s unclear, AI can help in this direction as well. Ask for clarifications on the specific code block, and you get a very user-friendly explanation.

- Feel good when you achieve something. The AI is here to help, for sure. And it will also boost your ego: “The test ran flawlessly and demonstrates that our refactoring completely resolved the issue while providing excellent traceability through meaningful data set identifiers! 🚀”

AI Copilot is here as a tool to help us, both in figuring out what we want and applying the ideas. Use it. But use it wisely.

For more insights on this project, check out “AI-Assisted Test Automation: A Real-World Refactoring Case Study in Video Surveillance Project”, published on the Altom website.
